Some time ago, I received a ticket from a customer to change an A record in a zone: a quick, easy thing to do. I made the change and told them that it was done. A few days later, I got a follow-up ticket saying “This doesn’t appear to be done.” I checked and, sure enough, the change didn’t appear when I queried the DNS servers. Apparently, in my haste, I had forgotten one simple but necessary step: incrementing the serial number in the zone file. Oops. Chagrined, I incremented the serial number, reloaded the zone file, verified that the change did indeed appear, and apologized profusely to the customer.

If only I had tested the change before deciding I was done with the work, I would have found my error before my customer did.

Mistakes like this don’t happen often, but they do happen. I know I have made many errors like this one throughout my time as a system administrator.

One Friday about a year ago, I handled a particularly hairy ticket. I spent the entire weekend dreading a phone call from the person on call telling me that I had screwed up. That call never came, so I guess I did it right. On the following Monday, I decided to avoid weekends like that one and adopted a new policy: test my work before saying that I’m done. Before I do something, I set up a test for the desired outcome, verify that the test fails, do the work, and then verify that the test passes.
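The policy boils down to three steps: fail, work, pass. Here is a rough sketch of that loop in Python rather than Cucumber. Every name in it (`do_tested_work`, `CheckError`, the `zone` dict standing in for a real DNS server) is made up for illustration and is not how my actual tests are written.

```python
from typing import Callable


class CheckError(Exception):
    """Raised when a check does not behave as the workflow expects."""


def do_tested_work(check: Callable[[], bool], work: Callable[[], None]) -> None:
    """Run work() only between a failing check and a passing check."""
    # Step 1: the check must fail first, otherwise it proves nothing.
    if check():
        raise CheckError("check passes before the work was done")
    # Step 2: do the actual work.
    work()
    # Step 3: the same check must now pass.
    if not check():
        raise CheckError("check still fails after the work was done")


# Hypothetical stand-in for a DNS zone: a plain dict, not a real server.
zone = {"foo.example.com": "10.1.1.1"}

do_tested_work(
    check=lambda: zone["foo.example.com"] == "10.1.1.2",
    work=lambda: zone.update({"foo.example.com": "10.1.1.2"}),
)
```

The first step is the one that is easy to skip. A check that already passes before the work starts is probably not testing the change at all.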

An Example

To give you an idea of how this works, let’s walk through an example based on the scenario I mentioned earlier. Since I use Cucumber for my tests, I write a feature that looks like this:

    Feature: Change A record for foo.example.com

      Scenario:
        When I query for the A record for "foo.example.com"
        Then I should get a response with the A record "10.1.1.2"

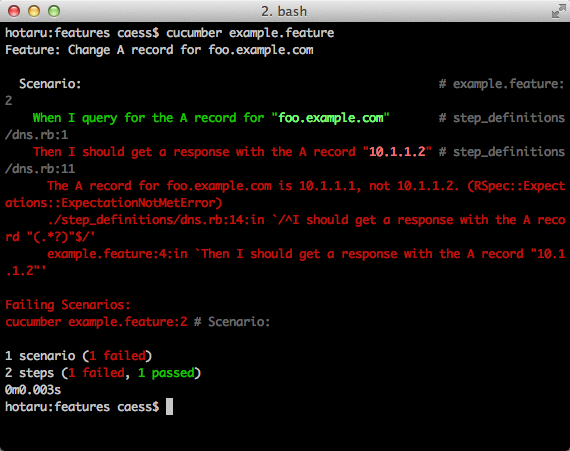

I then run this feature and verify that it fails:

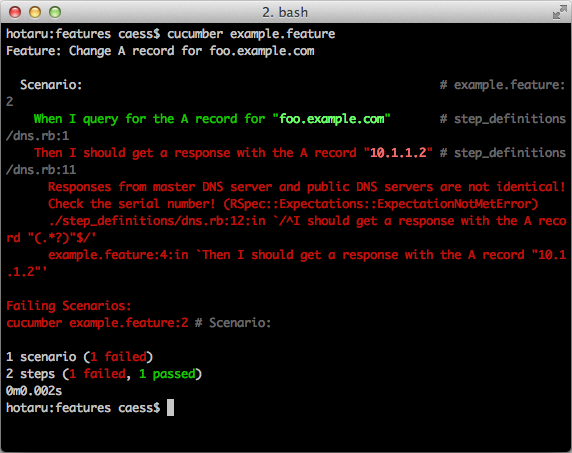

I make the change on the DNS server and run the test again:

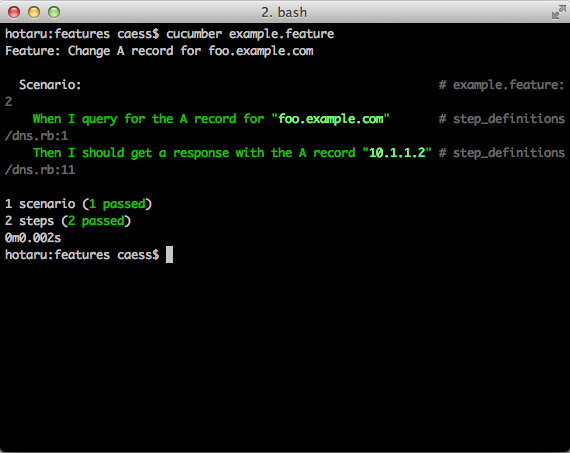

What? It failed? Oh, once again I forgot to increment the serial number. Let’s fix that and run the test one more time.

It passed! That means I’m done and can tell that to my customer, confident that an error hasn’t slipped through.
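The step definitions behind that feature are not shown here. As a minimal sketch of the same check in Python, assuming the system resolver is an acceptable stand-in for querying the DNS server directly (it may cache old answers, so a real test would more likely ask the authoritative server), it could look something like this. The names `lookup_a_records` and `a_record_matches` are hypothetical.

```python
import socket
from typing import Callable, List


def lookup_a_records(hostname: str) -> List[str]:
    """Return the IPv4 addresses the system resolver gives for hostname."""
    infos = socket.getaddrinfo(
        hostname, None, family=socket.AF_INET, type=socket.SOCK_STREAM
    )
    # Each entry is (family, type, proto, canonname, (address, port)).
    return sorted({info[4][0] for info in infos})


def a_record_matches(
    hostname: str,
    expected_ip: str,
    lookup: Callable[[str], List[str]] = lookup_a_records,
) -> bool:
    """True if expected_ip is among the A records returned for hostname."""
    return expected_ip in lookup(hostname)
```

The `lookup` parameter is there so the check can be pointed at a different resolver, or at a fake one when trying the code out without touching the network.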

What I’ve learned

Having done this for about a year, I’ve learned several things.

- There is a cost to testing. If I already have step definitions set up for my test, it only adds a few minutes. If I have to write new step definitions, it takes a lot longer. (Some step definitions, particularly those that have to scrape websites, have taken hours to write.) Even if it only takes a few minutes to set up and run the test, that’s still a few minutes.

- Some things cannot or should not be tested directly. In these cases, indirect tests are needed. Instead of verifying the desired behavior, you might need to test the configuration that specifies the desired behavior. Care must be taken when using indirect tests since they are not as reliable as direct tests.

For example, most tasks involving email delivery or routing should not be tested directly because emails sent for testing would be seen as unwanted noise by the customer or because you do not have the login credentials for the email account. If your customer wants emails to foo@example.com forwarded to bar@example.com, you can test the mailserver configuration to verify that the forwarding is set up.

- Make sure that the test checks everything that is being done. (This is especially true for indirect tests.) If your test only checks part of the functionality, your test may pass even though you’re not actually done. For example, if you’re supposed to set up a website that pulls data from a database, make sure you test for content that’s in the database. If you only test for the presence of a string from static content (say, from the template for the site), your test will pass even though you haven’t set up the database privileges correctly.
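To make the email forwarding case concrete, here is a sketch of an indirect test in Python. It assumes the forwarding lives in a Postfix-style virtual alias table (one `source destination[, destination]` entry per line). That format is an assumption for illustration, since the mail server is not specified, and the function names are hypothetical. The sketch ignores continuation lines and regular-expression tables.

```python
import re
from typing import List


def forwarding_targets(alias_table: str, address: str) -> List[str]:
    """Return the destinations configured for address, or [] if none."""
    for raw_line in alias_table.splitlines():
        line = raw_line.strip()
        # Skip blank lines and comment lines.
        if not line or line.startswith("#"):
            continue
        parts = line.split(None, 1)
        if len(parts) == 2 and parts[0].lower() == address.lower():
            # Destinations may be separated by commas and/or whitespace.
            return [t for t in re.split(r"[,\s]+", parts[1]) if t]
    return []


def is_forwarded(alias_table: str, source: str, destination: str) -> bool:
    """True only if source is explicitly forwarded to destination."""
    return destination in forwarding_targets(alias_table, source)
```

Note that the check looks at the whole mapping from source to destination. A test that only searched the file for the string foo@example.com would pass even if the address were forwarded to the wrong place.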

I believe in testing. Why should you?

Every error has costs. The obvious cost is time: It takes time to fix an error. This time may exceed the original time it took to do the work.

The more important cost is in customer respect and goodwill. Every error that your customers see takes away from the respect and goodwill you’ve built up. Sadly, it’s a lot easier to lose respect than to gain it. If your customers see enough errors from you that you deplete that respect, there will be consequences. Even if they don’t quit your services entirely, they are sure to tell their friends and colleagues that you’re incompetent, untrustworthy, and, perhaps most damning, unprofessional.

“But I don’t have time to test!” you might say. That’s precisely when you should be testing! If you’re doing everything at a breakneck pace, you’re sure to make mistakes. When you’re stressed and overloaded is precisely when you should slow down and do things carefully. Every error you catch now saves you from paying the cost of that error later.

How has this worked out over the past year?

Over the past year, I have tested most of the work I have done. Various reasons have prevented me from testing all of it.

I feel like my customer-visible error rate has decreased significantly. (I don’t have accurate statistics so I don’t know for sure.) Of all of the mistakes that were reported by my customers in the past year, only one has been for work I had tested and that was because the test was not complete. All other reported errors were for untested work. Said another way: My customer-visible error rate for properly tested work is 0%. I think that’s a pretty good track record.

Closing thoughts

Creating tests for checking your work will help you decrease your customer-visible error rate. They won’t help you make fewer errors, but they will help you prevent your customers from seeing them.

I recommend trying it out. Write a test for a particular work item, do the work, and make your test pass. Now do the same for your next work item. And then the one after that. And so on.